Introduction: Artificial intelligence (AI) refers to the simulation of human intelligence in machines or computer systems that are programmed to think like humans and mimic their actions. ChatGPT (Chat Generative Pre-trained Transformer) is an online AI chatbot trained to hold human-like conversations and generate detailed responses to queries or questions. ChatGPT became a blockbuster and a global sensation when it was released in November 2022. According to web traffic data from similarweb.com, OpenAI’s ChatGPT surpassed one billion page visits in February 2023, cementing its position as the fastest-growing app in history. In contrast, it took TikTok about nine months after its global launch to reach 100 million users, while Instagram took more than two years. Users of ChatGPT span the world, with the United States having the highest number of users, accounting for 15.73% of the total. India is second with 7.10%. China is 20th with 16.58%, Nigeria is 24th with 12.24%, while South Africa is 41st with 12.63%, as of March 14, 2023. OpenAI, the parent company of ChatGPT, is currently valued at about $29 billion.

Feedback and reactions from academics and from global and business leaders to artificial intelligence tools like ChatGPT are mixed. The likes of Bill Gates agree that ChatGPT can free up time in workers’ lives by making employees more efficient. A study titled “Experimental Evidence on the Productivity Effects of Generative Artificial Intelligence”, by two economics PhD candidates at the Massachusetts Institute of Technology (MIT), attests that using ChatGPT made white-collar work swifter with no sacrifice in quality and made it easier to “improve work quickly”. Israeli President Isaac Herzog recently revealed that the opening part of his speech was written by the artificial intelligence software ChatGPT. Lately, Bill Gates and the UK Prime Minister, Rishi Sunak, were reportedly grilled by ChatGPT during an interview. Similarly, the United States Department of Defense (DoD) enlisted ChatGPT to write a press release about a new task force exploring novel ways to forestall the threat of unmanned aerial systems.

On the flip side, Elon Musk is of the opinion that “artificial intelligence is the real existential risk to humankind”. According to Musk, “artificial intelligence will outsmart humanity and overtake human civilization in less than five years”. On March 29, 2023, an open letter surfaced online with signatures from 1,000 prominent tech leaders and researchers from academic and industrial heavyweights like Oxford, Cambridge, Stanford, Caltech, Columbia, Google, Microsoft and Amazon, including Elon Musk and Apple co-founder Steve Wozniak. The letter urged the world’s leading artificial intelligence labs to pause the training of new super-powerful systems for six months, saying that recent advances in AI present “profound risks to society and humanity”. Before this, the theoretical physicist Professor Stephen Hawking, one of Britain’s pre-eminent scientists, seemed to agree with Elon Musk. He warned in 2014 that artificial intelligence could spell the end of the human race. In the words of Hawking, “Once humans develop artificial intelligence it would take off on its own, and re-design itself at an ever increasing rate”. He went further to assert that “Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded”. Cybersecurity experts from the National Cyber Security Centre (NCSC), a branch of the United Kingdom’s spy agency, Government Communications Headquarters (GCHQ), say artificially intelligent chatbots like ChatGPT pose a security threat because sensitive queries, including potentially user-identifiable information, could be hacked or leaked.

Underlying Principle And Modus Operandi of ChatGPT

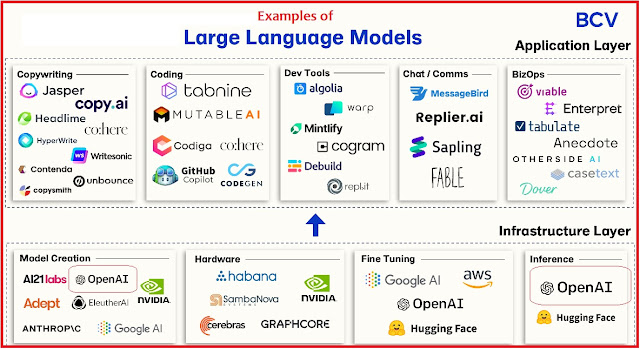

ChatGPT is essentially a large language model (LLM): a deep learning algorithm that can recognize, summarize, translate, predict, and generate text and other content based on knowledge gained from massive datasets. On March 14, 2023, OpenAI officially announced the launch of the large multimodal model GPT-4. A major difference between GPT-3.5 and GPT-4 is that while GPT-3.5 is a text-to-text model, GPT-4 is more of a data-to-text model that accepts images as well as text as input. Additionally, GPT-3.5 is limited to responses of about 3,000 words, while GPT-4 can generate responses of more than 25,000 words and is more multilingual. GPT-4 is 82% less likely to respond to requests for disallowed content than its predecessor and scores 40% higher on certain tests of factuality. It will also let developers decide their AI’s tone and verbosity.

Image Credit: Bain Capital Ventures

Basically, large language models (LLMs) are trained on massive amounts of data to predict accurately what word comes next in a sentence. To give an idea of scale, the ChatGPT model is said to have been trained on a massive 570GB of data sourced from books, Wikipedia, research articles, websites, and other content and writing on the internet. Approximately 300 billion words were reportedly fed into the system. This is equivalent to roughly 164,129 times the number of words in the entire Lord of the Rings series (including The Hobbit).

Currently, ChatGPT has very limited knowledge of the world after 2021, so it is inept at answering questions about recent or real-time events. In addition to English, ChatGPT understands 95 other languages spoken around the world, including French, Spanish, German, and Chinese. Unfortunately, ChatGPT does not currently recognize any Nigerian local language. ChatGPT uses speech and text-to-speech technologies, which means you can talk to it through your microphone and hear its responses spoken aloud.

It is important to note that the ChatGPT model can become overwhelmed and generate incorrect information, repetitions, or unusual combinations of words and phrases. This is because “Large language models like ChatGPT are trained to generate text that is fluent and coherent, but they may not always be able to generate responses that are as nuanced or creative as those written by a human”. A blog post by Dr. David Wilkinson, a lecturer at Oxford University and editor-in-chief of The Oxford Review, reports that ChatGPT appears to be making up academic references. In essence, Wilkinson counsels that just because something comes out of ChatGPT doesn’t mean it’s right: “You need to be very careful about what you’re doing, particularly in academic circumstances, but also professional”.
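To make the idea of predicting the next word more concrete, here is a minimal sketch in Python. It is a toy word-pair (bigram) counter, not how ChatGPT is actually built, since ChatGPT relies on a vastly larger neural network. The function names and the tiny sample corpus are invented purely for illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        following[current_word][next_word] += 1
    return following


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]


# Hypothetical miniature "training set"; real models see hundreds of billions of words.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))    # most common word seen after "the"
print(predict_next_word(model, "zebra"))  # a word the model has never seen
```

The sketch also hints at why such systems can sound fluent while being wrong: the model only knows which words tend to follow which, not whether the resulting sentence is true.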

How AI-Powered ChatGPT Sparked A Chatbot Arms Race

After ChatGPT went viral, major tech companies around the world started scrambling to deploy generative artificial intelligence. Following speculation that the release of ChatGPT could disrupt and upend the search engine business, Google reportedly triggered a “code red”, summoning founders Sergey Brin and Larry Page back to the company. Soon after, Google launched an experimental AI-powered chatbot called Bard, which is powered by its Language Model for Dialogue Applications (LaMDA for short). Google is said to be using artificial intelligence to improve its search features, including the popular Google Lens and the new multisearch feature. Google’s parent company, Alphabet, lost $163bn in value after Bard answered a question about the James Webb telescope incorrectly during a demo. Microsoft recently embedded ChatGPT technology in its product suite, including Microsoft 365 (Word documents, Excel spreadsheets, PowerPoint presentations, and Outlook emails). Not to be outflanked, Apple, Meta (parent company of Facebook), and Amazon have plunged into generative artificial intelligence. Apart from Microsoft Bing, other AI-powered search engines giving Google a run for its money include Wolfram Alpha, You.com, Perplexity AI, Andi, Metaphor, and Neeva, amongst others.

Image Credit: Study IQ

Artificial Intelligence (ChatGPT) And National Security Concerns

No technological innovation is without some downside, especially a nascent and evolving one. Just recently, the United Kingdom’s intelligence, security, and cybersecurity agency, the Government Communications Headquarters, commonly known as GCHQ, warned that AI-powered chatbots like ChatGPT are an emerging security threat. GCHQ says companies operating the technology, like Microsoft and Google, are able to read questions typed into the chatbots. United States Congressman Ted Lieu recently wrote an opinion piece in the New York Times in which he expressed his enthusiasm about artificial intelligence (AI) and “the incredible ways it will continue to advance society”, but also said he is “very concerned about AI, particularly uncontrolled and disorganized AI”. Lieu wrote: “Imagine a world where autonomous weapons would roam the streets, decisions about your life are made by AI systems that perpetuate societal biases and hackers use AI to launch devastating cyberattacks”.

According to Steven Stalinsky, the executive director of the Middle East Media Research Institute (MEMRI), “it is not a question of whether terrorists will use Artificial Intelligence (AI), but of how and when”. He noted that terrorists currently use technology, including cryptocurrency for fundraising and encryption for communications; AI for hacking and weapons systems, including drones and self-driving car bombs; and bots for outreach, recruitment, and planning attacks. Stalinsky added that “Jihadis, for their part, have always been early adopters of emerging technologies: Al-Qaeda leader Osama bin Laden used email to communicate his plans for the 9/11 attacks. American-born Al-Qaeda ideologue Anwar Al-Awlaki used YouTube for outreach, recruiting a generation of followers in the West. Indeed, by 2010, senior Al-Qaeda commanders were conducting highly selective recruitment of ‘specialist cadres with technology skills’”.

As a matter of fact, there is growing concern that ChatGPT could make disinformation campaigns and political interference more widespread and realistic than ever. Sam Altman, the CEO of OpenAI, the company that created ChatGPT, acknowledged that artificial intelligence technology will reshape society but comes with real dangers such as large-scale disinformation. It is feared that non-state actors and authoritarian regimes could exploit ChatGPT to pollute public spaces with toxic elements and further undermine citizens’ trust in democracies. To this end, Adrian Joseph, British Telecom’s chief data and artificial intelligence officer, said the United Kingdom needs to invest in and support the creation of a British version of ChatGPT, “BritGPT”, or the country would risk its national security and declining competitiveness.

The head of the FBI, Christopher Wray, told a House Homeland Security Committee hearing that the agency has “national security concerns” about TikTok, warning that the Chinese government could potentially use the popular video-sharing app to harvest data on millions of Americans, compromise personal devices, or conduct influence operations if it elects to do so.

Open Source Intelligence Analysis in the Age of Artificial Intelligence - ChatGPT

The former director of the US National Geospatial-Intelligence Agency, Robert Cardillo, predicted years ago that bots would soon be analyzing most of the imagery collected by satellites and would replace many human analysts. According to a report, “artificial intelligence and automation will plausibly perform 75 percent of the tasks currently done by the new front line of American intelligence spies: the analysts who collect, analyze, and interpret geospatial images beamed from drones, open source intelligence, reconnaissance or intelligence satellites and other feeds around the globe”. Perhaps this is why the United States Central Intelligence Agency (CIA) is exploring the use of chatbots and generative artificial intelligence capabilities to assist its officers in completing day-to-day job functions and their overarching spy missions.

Image Credit: TechViral

Nevertheless, Patrick Biltgen, a principal at the defense and intelligence contractor Booz Allen Hamilton, is one of those who do not foresee artificial intelligence or ChatGPT upstaging human intelligence analysts or putting them out of jobs, at least for now. According to Biltgen, “a lot of Artificial Intelligence-aided reporting today is very formulaic and not as credible as human analysis”. He asserts that a ChatGPT for national security analysis would have to be pre-trained “with all the intelligence reports that have ever been written, plus all of the news articles and all of Wikipedia”. He says, “I don’t believe you can make a predictor machine, but it might be possible for a chatbot to give me a list of the most likely possible next steps that would happen as a result of this series of events.” Biltgen went further to say that, “on the other hand, Intelligence analysts are building upon their knowledge of what they have seen happen over time.”

Amy Zegart, a senior fellow at Stanford’s Freeman Spogli Institute for International Studies and the Hoover Institution, as well as chair of the HAI Steering Committee on International Security, believes that in the new era of artificial intelligence, “sophisticated intelligence can come from almost anywhere: armchair researchers, private technology companies, commercial satellites and ordinary citizens who livestream on Facebook”. Zegart asserts that “Human intelligence will always be important, but machine learning can free up humans for tasks that they’re better at”. She says that while “satellites and artificial intelligence algorithms are good at counting the number of trucks on a bridge, they can’t tell you what those trucks mean. You need humans to figure out the wishes, intentions, and desires of others”. In her view, the less time human analysts spend counting trucks on a bridge, the better: “There is a vast amount of open-source data, but you need artificial intelligence to sift through it”.

According to the MI5 Director-General, Ken McCallum, “The UK faces a broader and more complex range of threats, with the clues hidden in ever-more fragmented data”. For instance, artificial intelligence can be used to scan and triage images and to identify dangerous weapons. In this light, MI5 is partnering with the Alan Turing Institute with the aim of “applying artificial intelligence (AI) and data science to provide new insights, confront and mitigate national security challenges to the United Kingdom”.

Image Credit: The Alan Turing Institute

ChatGPT is an incredibly powerful tool that is revolutionizing OSINT investigations. It can automate repetitive tasks, such as collecting data and analyzing and extracting information from large amounts of unstructured text from various sources, thereby making investigations more efficient and effective. This allows investigators to focus on what really matters: verifying the information properly. For example, an investigator can instruct ChatGPT to “provide a list of all known aliases and social media accounts associated with the individual named [insert name]” or to “extract all posts and comments made by the individual named [insert name] on the social media platform [insert platform]”. In addition, ChatGPT can be used for social network analysis, generating leads and connections between individuals and organizations. For instance, an investigator can instruct ChatGPT to “find connections between individuals and organizations based on their online presence and interactions, and list them”.

Google Dorking, also known as Google Hacking, is a technique used by sleuths in OSINT (Open-Source Intelligence) investigations to search for specific information on the internet using advanced search operators. This technique allows investigators to search specific parts of a website or to narrow search results to a specific file type.
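As a rough illustration of the advanced search operators used in Google Dorking, the sketch below assembles a query from Google’s `site:`, `filetype:` and `intitle:` operators. The helper names are hypothetical and `example.org` is a placeholder domain. The code only builds the query string and a search URL; it does not contact Google.

```python
from urllib.parse import quote_plus


def build_dork_query(phrase, site=None, filetype=None, intitle=None):
    """Combine an exact phrase with optional Google search operators."""
    parts = []
    if site:
        parts.append(f"site:{site}")          # restrict results to one website
    if filetype:
        parts.append(f"filetype:{filetype}")  # restrict results to one file type
    if intitle:
        parts.append(f'intitle:"{intitle}"')  # require words in the page title
    parts.append(f'"{phrase}"')               # quotes request an exact phrase match
    return " ".join(parts)


def build_search_url(query):
    """URL-encode the query so it can be pasted into a browser."""
    return "https://www.google.com/search?q=" + quote_plus(query)


# Hypothetical example: PDF files on a placeholder domain mentioning "annual report".
query = build_dork_query("annual report", site="example.org", filetype="pdf")
print(query)
print(build_search_url(query))
```

Keeping query construction separate from URL encoding lets an investigator review the human-readable query before running it.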

Research shows that 80 percent of the information processed by intelligence and analytical bodies in United Nations peace operations originates from publicly available information (PAI), or loosely, open source intelligence (OSINT), making OSINT a discipline that significantly dominates other intelligence disciplines (Nikolić 2017). For instance, by “tracking and constantly monitoring social media, analysts can gain real-time insights into public opinion even in volatile environments”.

The United Nations is exploring the deployment of artificial intelligence, particularly machine learning and natural language processing, for the noble purposes of peace, security, and conflict prevention. Three uses come to mind: overcoming cultural and language barriers, anticipating the deeper drivers of conflict, and advancing decision making. An artificial intelligence system was recently used by the United Nations Support Mission in Libya (UNSMIL) to test support for potential policies, such as the development of a unified currency.

The Geopolitical Upshots of Artificial Intelligence, ChatGPT

ChatGPT seems to be engendering geopolitical competition between world powers. Ideally, ChatGPT should be accessible anywhere in the world with internet connectivity, but this is far from the reality. Some countries, especially those with authoritarian regimes such as China, Russia, Afghanistan, Iran, Venezuela, and North Korea, implement censorship and surveillance to monitor internet usage and restrict the use of ChatGPT due to geopolitical and national security concerns. China leads the pack. Though not officially available in China, ChatGPT caused quite a stir there. Some users are able to access it using tools such as virtual private networks (VPNs) or third-party integrations into messaging apps such as WeChat to circumvent censorship by the Chinese government. Japan’s Nikkei news service reported that Chinese tech giants Tencent and Ant Group were told not to use ChatGPT services on their platforms, either directly or indirectly, because there seems to be growing alarm in Beijing over the AI-powered chatbot’s uncensored replies to user queries. Writing in Foreign Policy, Nicholas Welch and Jordan Schneider cited a recent article by Zhou Ting (dean of the School of Government and Public Affairs at the Communication University of China) and Pu Cheng (a Ph.D. student), who argued that the dangers of AI chatbots include “becoming a tool in cognitive warfare, prolonging international conflicts, damaging cybersecurity, and exacerbating global digital inequality”. Zhou and Pu alluded to an unverified ChatGPT conversation in which the bot justified the United States shooting down a hypothetical Chinese civilian balloon floating over U.S. airspace yet answered that China should not shoot down such a balloon originating from the United States.

According to Shawn Henry, Chief Security Officer of the cybersecurity firm CrowdStrike, “China wants to be the No. 1 superpower in the world and they have been targeting U.S. technology, U.S. personal information. They’ve been doing electronic espionage for several decades now”. A report from the cybersecurity company Feroot said the TikTok app can collect and transfer your data even if you have never used the app. “TikTok can be present on a website in pretty much any sector in the form of TikTok pixels/trackers. The pixels transfer the data to locations around the globe, including China and Russia, often before users have a chance to accept cookies or otherwise grant consent,” the Feroot report said. The top three EU bodies (the European Parliament, the European Commission, and the EU Council), the United States, Denmark, Belgium, Canada, Taiwan, Pakistan, India, and Afghanistan have all banned TikTok, especially on government devices, citing cybersecurity concerns. On March 17, New Zealand became the latest country to announce a ban on TikTok on lawmakers’ phones, effective at the end of March 2023.

Not to be outflanked, the Chinese company Baidu is set to release its own AI-powered chatbot. The Chinese e-commerce platform Alibaba is reportedly testing ChatGPT-style technology. Alibaba christened its artificial intelligence language model DAMO (Discovery, Adventure, Momentum, and Outlook). Another Chinese e-commerce company, JD.com, says its “ChatJD” will focus on retail and finance, while TikTok has a generative AI text-to-image system.

Education And Plagiarism In The Age of ChatGPT

The advent of ChatGPT unnerved some universities and academics around the world. As an illustration, a 2,000-word essay written by ChatGPT helped a student get a passing grade in an MBA exam at the Wharton School of the University of Pennsylvania. Apart from the Wharton exam, which ChatGPT passed with plausibly a B or B- grade, the AI chatbot has so far passed other advanced exams, including all three parts of the United States Medical Licensing Examination, within a comfortable range. ChatGPT recently passed exams in four law school courses at the University of Minnesota. In total, the bot answered over 95 multiple-choice questions and 12 essay questions that were blindly graded by professors. Ultimately, the professors gave ChatGPT a “low but passing grade in all four courses”, approximately equivalent to a C+. ChatGPT passed a Stanford Medical School final in clinical reasoning with an overall score of 72%. GPT-4 recently took other exams, including the Uniform Bar Exam, the Law School Admission Test (LSAT), the Graduate Record Examinations (GRE), and the Advanced Placement (AP) exams. It aced these exams, except those in English language and literature. ChatGPT may not always be a smarty-pants: it reportedly flunked the Union Public Service Commission (UPSC) exam used by the Indian government to recruit its top-tier officials.

Image Credit: YouTube

Thus, several schools in the United States, Australia, France, and India have banned ChatGPT and other artificial intelligence tools on school networks or computers, due to concerns about plagiarism and false information. Annie Chechitelli, Chief Product Officer for Turnitin, an academic integrity service used by educators in 140 countries, submits that artificial intelligence plagiarism presents a new challenge. In addition, Eric Wang, vice president for AI at Turnitin, asserts that “[ChatGPT] tend to write in a very, very average way. Humans all have idiosyncrasies. We all deviate from average one way or another. So, we are able to build detectors that look for cases where an entire document or entire passage is uncannily average.”

Dr. LuPaulette Taylor, who teaches high school English in Oakland, California, is one of those concerned that ChatGPT could be used by students to do their homework, thereby undermining learning. Taylor, who has taught for the past 42 years, listed some skills that she worries could be eroded as a result of students having access to AI programs like ChatGPT. According to her, these include “the critical thinking that we all need as human beings, the creativity, and also the benefit of having done something yourself and saying, ‘I did that’”.

To guard against plagiarism with ChatGPT, Turnitin recently developed an AI writing detector that, in its lab, identifies 97 percent of ChatGPT- and GPT-3-authored writing, with a false positive rate of less than 1 in 100. Interestingly, a survey shows that teachers are actually using ChatGPT more than students. The study by the Walton Family Foundation found that within only two months of its introduction, 51% of 1,000 K-12 teachers reported having used ChatGPT, with 40% using it at least once a week.

The wolf at the door: ChatGPT could make some jobs obsolete

There are existential worries that artificial intelligence tools such as ChatGPT will lead to job losses. According to a report released by Goldman Sachs economists, artificial intelligence could replace up to 300 million full-time workers around the world through automation, and the most at-risk professions are white-collar ones. An earlier study released in 2018 by the McKinsey Global Institute predicted that 400 million people worldwide could be displaced as a result of artificial intelligence before 2030. Pengcheng Shi, an associate dean in the Department of Computing and Information Sciences at Rochester Institute of Technology, believes that the wolf is at the door and that ChatGPT will affect some careers. He affirms that the financial sector, health care, publishing, and a number of other industries are vulnerable.

According to a survey of 1,000 business leaders in the United States by resumebuilder.com, companies currently use ChatGPT for writing job descriptions (77 per cent), drafting interview questions (66 per cent), responding to applicants (65 per cent), writing code (66 per cent), writing copy/content (58 per cent), customer support (57 per cent), creating summaries of meetings or documents (52 per cent), research (45 per cent), and generating task lists (45 per cent). Within five years, 63 per cent of business leaders say ChatGPT will “definitely” (32 per cent) or “probably” (31 per cent) lead to workers being laid off. As a matter of fact, companies are already reaping the rewards of deploying ChatGPT: 99 per cent of employers using ChatGPT say they have saved money. When assessing candidates to hire, 92 per cent of business leaders say having artificial intelligence/chatbot experience is a plus, and 90 per cent say it is beneficial if the candidate has ChatGPT-specific experience. It follows that job seekers will need to add ChatGPT to their skillset to make themselves more marketable in a post-ChatGPT world because, as seen throughout history, as technology evolves, workers’ skills need to evolve.

Image Credit: Electrical Direct

§ Software engineering: Now that ChatGPT can seamlessly draft code and generate a website, anyone who hitherto earned a living doing such work should be worried. However, Professor Oded Netzer of the Columbia Business School reckons that AI will help coders rather than replace them.

§ Journalism: Artificial Intelligence is already making inroads into newsrooms, especially in newsgathering, copy editing, summarizing, and making an article concise.

§ Legal profession: Writing under the banner “Legal Currents and Futures: ChatGPT: A Versatile Tool for Legal Professionals”, Jeanne Eicks, J.D., associate dean for Graduate and Lifelong Learning Programs at The Colleges of Law, posits that chatbots such as ChatGPT can support legal professionals in several ways, such as streamlining communication and scheduling meetings with clients and other parties involved in legal cases, automating the creation of legal documents such as contracts and filings, conducting legal research and searching legal databases, analyzing data, and making predictions about legal outcomes.

§ Graphic design: Artificial intelligence image generators such as Stable Diffusion, WOMBO, Craiyon, Midjourney, and DALL-E (owned by OpenAI, the company behind ChatGPT) can generate tailored images on command from user-written prompts. They use deep learning models trained on large datasets to create a new, highly detailed image based on the prompt text. These tools will pose a threat to many in the graphic and creative design spaces.

§ Customer service agents: Robots and chatbots are already doing customer service jobs – chatting and answering calls. ChatGPT and related technologies could ramp up this trend. As a matter of fact, a 2022 study from the tech research company Gartner predicted that chatbots will be the main customer service channel for roughly 25% of companies by 2027.

§ Artificial intelligence and banking: In the financial or banking world, artificial intelligence tools such as ChatGPT can be deployed in customer service, fraud detection, wealth management, financial planning, know your customer (KYC) and anti-money laundering (AML) checks, customer onboarding, and risk management.

§ Healthcare industry: Artificial intelligence (AI) has made significant advancements in the healthcare industry. ChatGPT can be deployed as a virtual assistant for telemedicine, remote patient monitoring, medical recordkeeping and writing clinical notes, medical translation, disease surveillance, medical education, mental health support, identifying potential participants for clinical trials, and triaging patients by asking them questions about their symptoms and medical history to determine the urgency and severity of their condition. Similarly, Dr Beena Ahmed, an Associate Professor at the University of New South Wales (UNSW), Australia, believes that artificial intelligence (AI) and machine learning systems could be used in the future to make predictions on specific health outcomes for individuals based on medical data collected from large populations, thereby improving life expectancy.

§ Automobile industry: American automaker General Motors is mulling the idea of deploying ChatGPT as a virtual personal assistant in its vehicles. For instance, if a driver got a flat tire, they could ask the car to play an instructional video inside the vehicle on how to change it. Hypothetically, if a diagnostic light popped up on a car’s dashboard, a motorist would be able to ask the digital assistant whether they should pull over or keep driving and deal with the issue when they get home. It might even be able to make an appointment at a recommended repair shop.

§ Supply chain management: ChatGPT is anticipated to be a game-changer for supply chain management. The use of machine learning for supply chain management will entail forecasting, inventory optimization, and customer service.

The implication of the foregoing is that the most resilient careers will be those that require face-to-face interaction and physical skills that AI cannot replace. Hence, trades (plasterers, electricians, mechanics, etc.) and services (everything from hairdressers to chiropodists) will continue to rely on human understanding of the task and human ability to deliver it.

Cybersecurity And The Dark Side of Artificial Intelligence

§ Phishing emails: Phishing is a type of social engineering attack in which the attacker crafts a fraudulent yet believable email to deceive recipients into carrying out harmful instructions. These instructions may include clicking on an unsafe link, opening an attachment, providing sensitive information, or transferring money into specific accounts.

§ Data theft: Data theft entails any unauthorized access to, and exfiltration of, confidential data on a network. This includes personal details, passwords, or even software code, which threat actors can use in a ransomware attack or for any other malicious purpose.

§ Malware: Malware, or malicious software, is a broad term referring to any kind of software intended to harm the user in some form. It can be used to infiltrate electronic devices and servers, steal information, or simply destroy data.

§ Botnets: A botnet attack is a targeted cyber-attack in which a group of internet-connected devices (computers, servers, and others) is infiltrated and hijacked by a hacker.

The Positive Side of ChatGPT Towards Enhancing Cybersecurity

On the flip side, ChatGPT, like other large language models, can be deployed to enhance cybersecurity. Some examples include:

§ Phishing detection: ChatGPT can be trained to identify and flag potentially malicious emails or messages designed to trick users into providing sensitive information.

§ Spam filtering: ChatGPT can be used to automatically identify and filter out unwanted messages and emails, such as spam or unwanted advertising.

§ Malware analysis: ChatGPT can be used to automatically analyze and classify malicious software, such as viruses and trojans.

§ Intrusion detection: ChatGPT can be used to automatically identify and flag suspicious network traffic, such as malicious IP addresses or unusual patterns of data transfer.

§ Vulnerability assessment: ChatGPT can be used to automatically analyze software code to find and report vulnerabilities, such as buffer overflows.
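As a very rough illustration of what flagging a suspicious message can look like in practice, the sketch below applies a handful of hand-written rules. It is a deliberately simple stand-in and not a language model: the phrase lists, function names and threshold are assumptions made for illustration, and a real filter would need far more than this.

```python
import re

# Hypothetical indicator lists chosen only for illustration.
URGENCY_PHRASES = ("urgent", "immediately", "account suspended", "verify your account")
SENSITIVE_REQUESTS = ("password", "bank details", "card number")
RAW_IP_LINK = re.compile(r"https?://\d{1,3}(?:\.\d{1,3}){3}")


def phishing_indicators(message):
    """Return the list of simple warning signs found in a message."""
    text = message.lower()
    found = []
    if any(phrase in text for phrase in URGENCY_PHRASES):
        found.append("urgent language")
    if any(phrase in text for phrase in SENSITIVE_REQUESTS):
        found.append("asks for sensitive information")
    if RAW_IP_LINK.search(text):
        found.append("link to a raw IP address")
    return found


def looks_suspicious(message, threshold=2):
    """Flag a message when it shows at least `threshold` warning signs."""
    return len(phishing_indicators(message)) >= threshold


sample = "URGENT: verify your account at http://192.168.0.1/login and confirm your password"
print(phishing_indicators(sample))
print(looks_suspicious(sample))
print(looks_suspicious("Lunch at noon tomorrow?"))
```

Fixed rules like these are easy to evade with slightly different wording, which is part of the appeal of models that learn from large numbers of examples instead.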

ChatGPT And Privacy Concerns

Data privacy: ChatGPT is unarguably a data privacy nightmare. If you’ve ever posted online, you ought to be concerned. More than 4% of employees have knowingly or inadvertently put sensitive corporate data into large language models (LLMs) such as ChatGPT, raising concerns that their popularity may result in massive leaks of proprietary information if adequate data security is not in place. This explains why some global institutions, including JP Morgan and KPMG, have blocked the use of ChatGPT, while others like Accenture are instructing their teams to be cautious about how they use the technology because of privacy concerns. In a similar vein, Mishcon de Reya LLP, a British law firm with an international footprint, banned its lawyers from typing client data into ChatGPT over privacy and security fears.

Conclusion:

It is obvious that ChatGPT has far-reaching implications for national security, open source intelligence (OSINT) gathering, education, research, and the workforce. But the most alarming ramifications of such a technological innovation will be seen in the areas of disinformation and cybercrime, because ChatGPT carries with it a tremendous risk of misuse, and this will be buoyed by the paucity of regulatory frameworks. There is always a fear that government involvement can slow innovation. However, I agree with the submissions of Sophie Bushwick and Madhusree Mukerjee in Scientific American, where they advocated, inter alia, for regulation and oversight of the use of artificial intelligence. They contend that “overly strict regulations could stifle innovation and prevent the technology from reaching its full potential. On the other hand, insufficient regulation could lead to abuses of the technology”. Hence, it is important to strike the right balance.

Written by:

© Don OKEREKE

Tech-savvy security thought leader, analyst, researcher, writer, content creator, military veteran.

Blog: http://www.donokereke.blogspot.com

Twitter: @DonOkereke

March 18, 2023; Updated 30 March

